Uppa Hand

I built a fully automated web application to generate useful property reports for first home buyers.

Year

2025

Role

Product Design, Build

Opportunity

House hunting in this market sucks. As a Gen Zer I wouldn’t actually know, but anecdotally it’s a lot of research, hopes, dreams, calculations, and praying to your chosen deity just to be in with a chance. All of it can be squandered in the opening bid by a property developer at a rainy Saturday-morning auction you travelled an hour to attend.

Ouch. Could we shortcut that heartbreaking process for first- and second-home buyers whilst delivering some level of confidence and comfort? With the newest tech available in mid-2025, I think so.

While basic property data is readily available, high-quality analysis covering market trends, local development, and buyer competition is slow and expensive to produce. It also goes against the ethos of report providers (e.g. property groups and banks) to offer advice on negotiation, including when to bid and when to walk away. So the challenge was to design a system that could not only automate deep property research but also deliver it in a format that was both valuable and user-friendly for a less-experienced property-hunting audience.

In terms of dollars, the behavioural economics of mental accounting tells us that if you’re allocating $1.5M+ to the purchase of a house, then $50 for additional confidence and peace of mind comes out in the wash. With the LLM calls totalling $2.20 AUD per report, a $50 price point would make the project commercially viable.
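Here’s that back-of-envelope maths as a tiny TypeScript helper. It’s a sketch that assumes a $50 AUD price and counts only the $2.20 AUD of LLM calls per report; Stripe fees, hosting, and email costs are deliberately left out, so the margin it reports is an upper bound.

```typescript
// Back-of-envelope unit economics for one report.
// Assumption: only the LLM cost is counted; payment fees, hosting and email are excluded.
interface UnitEconomics {
  grossProfitAud: number;
  grossMarginPct: number;
}

function unitEconomics(priceAud: number, llmCostAud: number): UnitEconomics {
  if (priceAud <= 0) throw new Error("price must be positive");
  const grossProfitAud = priceAud - llmCostAud;
  return {
    // Round to cents and to one decimal place of percent to hide floating-point noise.
    grossProfitAud: Math.round(grossProfitAud * 100) / 100,
    grossMarginPct: Math.round((grossProfitAud / priceAud) * 1000) / 10,
  };
}

const report = unitEconomics(50, 2.2);
console.log(report); // { grossProfitAud: 47.8, grossMarginPct: 95.6 }
```

Even after real-world fees chip away at it, there is a lot of headroom between the cost of goods and the price.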

The stage is set. Let’s break a leg.

My Role

Working under the JK Labs umbrella, and as the Product Manager, Designer, and Developer, I owned the project from initial concept to launch.

- Product Strategy & Vision: I conducted the initial market and competitive analysis, defined the core value proposition, and wrote the product requirements document (PRD) that guided development, using MoSCoW prioritisation to rank features.

- System Architecture & Design: I designed the end-to-end user journey and architected the multi-agent AI workflow, selecting best-fit technology and iterating on chained prompts to meet the product goals.

- UX & Prototyping: I developed the information architecture, wireframes, and content for the look and feel of the front-end application.

- Development: I “vibe coded” the functional MVP with modern web frameworks to validate the core user experience. (Note: if vibe coding works for former Tesla Autopilot engineers [cc Andrej Karpathy], then it’s good enough for me.)

- Launch & Go-to-Market: I managed the end-to-end launch, including setting up the infrastructure for payments and report delivery, and implementing security measures and essential logging. The product is live and ready for marketing.

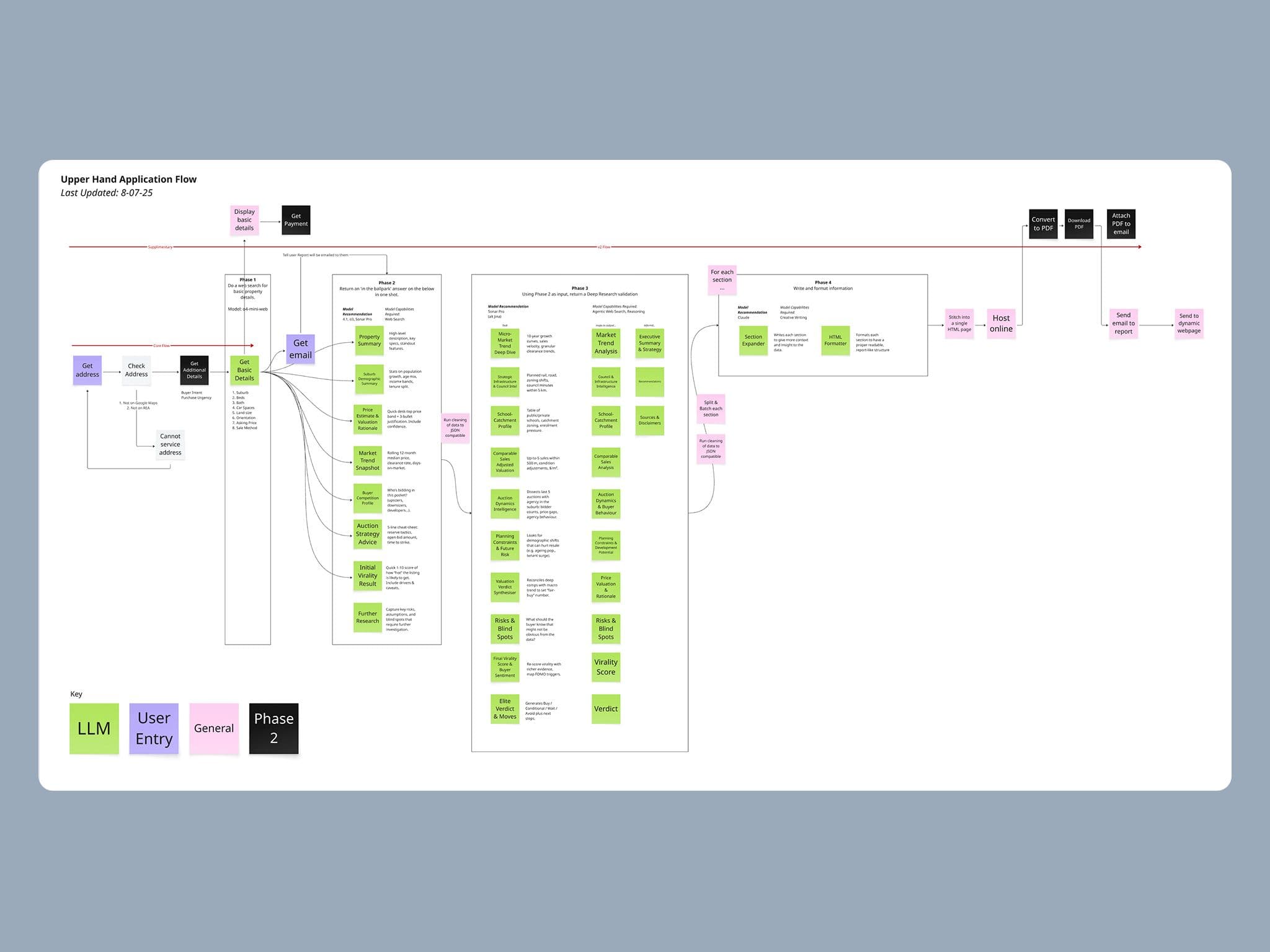

Mapping the system flow

Approach / Process

1. Gap Analysis

I began by analysing existing free and paid property reports to identify gaps in the market and define what a "best-in-class" automated report should contain. “Free” property reports provided by lenders gave only figures and no real analysis, while paid reports went slightly deeper into financial details but offered no actionable insights or recommendations, leaving users to interpret the data themselves. That was the gap to close for a first- or second-home buyer.

2. Determining Feature Set

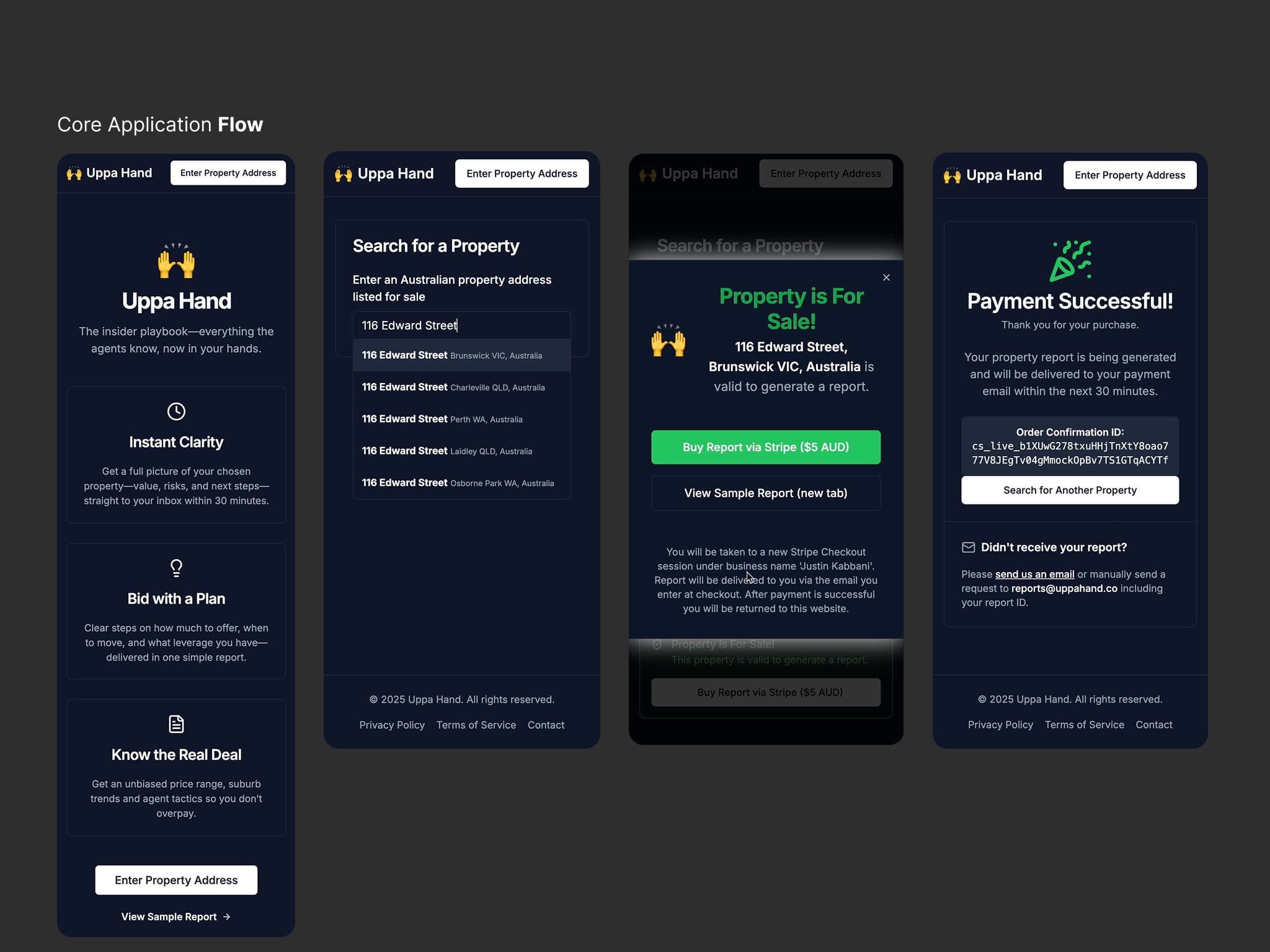

There are a million ways to over-engineer this (and I likely did anyway), so for the MVP I focused on:

1. Ensuring the property is a valid Australian address

2. Ensuring the property is listed for sale online

3. Email delivery

4. A well-researched report drawing on many credible sources

5. An accessible, browsable report

This scope skipped the complexity of managing a user database, and validating addresses through Google Maps up front saved many dollars on expensive-yet-doomed deep research runs.
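A minimal sketch of that validation gate, assuming the Google Geocoding API (the text only says “Google Maps”, so the specific API, the function names `geocodeUrl` and `validatedAddress`, and the acceptance rules are my assumptions). The idea is to reject anything that isn’t an exact, street-level Australian match before a single research call is paid for.

```typescript
// Minimal shapes for the parts of a Google Geocoding API response we inspect.
interface AddressComponent {
  long_name: string;
  short_name: string;
  types: string[];
}

interface GeocodeResult {
  formatted_address: string;
  address_components: AddressComponent[];
  partial_match?: boolean;
}

interface GeocodeResponse {
  status: string; // e.g. "OK", "ZERO_RESULTS"
  results: GeocodeResult[];
}

// Builds a Geocoding API request URL restricted to Australia.
function geocodeUrl(address: string, apiKey: string): string {
  const params = new URLSearchParams({ address, components: "country:AU", key: apiKey });
  return `https://maps.googleapis.com/maps/api/geocode/json?${params.toString()}`;
}

// Hypothetical acceptance rule: an exact (non-partial) top match in Australia with a
// street number and street name. Returns the normalised address, or null to stop early.
function validatedAddress(res: GeocodeResponse): string | null {
  if (res.status !== "OK" || res.results.length === 0) return null;
  const top = res.results[0];
  if (top.partial_match) return null;
  const has = (type: string, shortName?: string) =>
    top.address_components.some(
      (c) => c.types.includes(type) && (shortName === undefined || c.short_name === shortName),
    );
  return has("country", "AU") && has("street_number") && has("route")
    ? top.formatted_address
    : null;
}
```

Returning the normalised `formatted_address` (rather than a boolean) means the downstream research prompts all work from one canonical spelling of the address.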

3. Technology Evaluation & User Journey

The report was inspired by the output of ChatGPT’s Deep Research feature. After experimenting with the quality of output I could get while staying within chat-based interfaces, I determined that a single AI model couldn't deliver the required depth without hitting context limits or degrading in quality. My strategy was to architect a "multi-agent" system in n8n, treating each model as a specialist.

The Scout (OpenAI GPT-4o):

Its only job was to perform a quick, surface-level search to gather basic facts. It's fast and cheap for simple data retrieval.

The Investigator (Perplexity Sonar):

This model was tasked with deep, nuanced research, digging into complex topics that require synthesis from multiple sources.

The Creative (Anthropic Claude):

This model's strength is in structured, high-quality prose. Its job was to take the raw research and rewrite it into persuasive, well-formatted content.

The Compiler (Anthropic Claude, again):

A final pass with a tightly instructed model converted the polished text into clean, semantic HTML, a task it handles well given its strength with code and structure.
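The specialist hand-off can be sketched as a strictly linear chain, where each stage’s output becomes the next stage’s input. This is an illustration, not the actual n8n workflow: the model slugs are assumed OpenRouter-style IDs, the system prompts are placeholders, and the model caller (`CallModel`, a hypothetical type) is injected so the chain can be exercised without spending anything on API calls.

```typescript
// A function that sends one prompt to one model and resolves with its text output.
type CallModel = (model: string, system: string, user: string) => Promise<string>;

interface Agent {
  role: string;
  model: string;
  system: string;
}

// Assumed model slugs and placeholder prompts, one per specialist.
const AGENTS: Agent[] = [
  { role: "scout", model: "openai/gpt-4o", system: "Gather basic, verifiable facts about the property." },
  { role: "investigator", model: "perplexity/sonar", system: "Research market trends, local development and buyer competition, citing sources." },
  { role: "creative", model: "anthropic/claude-3.5-sonnet", system: "Rewrite the research into clear, well-structured prose for a first home buyer." },
  { role: "compiler", model: "anthropic/claude-3.5-sonnet", system: "Convert the text into clean, semantic HTML. Output HTML only." },
];

// Runs the agents in order, passing each stage's output forward as the next input.
async function runChain(address: string, call: CallModel, agents: Agent[] = AGENTS): Promise<string> {
  let payload = `Property: ${address}`;
  for (const agent of agents) {
    payload = await call(agent.model, agent.system, payload);
  }
  return payload;
}
```

Keeping the caller injectable is also what makes it cheap to swap a stage’s model or stub the whole chain while iterating on prompts.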

4. Backend Workflow Development

I used the low-code tool n8n to build a workflow that:

- Triggers on a successful Stripe payment.

- Executes a chain of API calls to the different AI models.

- Parses and transforms the raw research into polished HTML sections.

- Assembles the final report and uploads it to cloud storage.

- Emails the user a link to the report.

Neat, huh.
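Two of those steps, assembling the report and sending the email, are simple enough to sketch outside n8n. The snippet below is hypothetical: it assumes each AI stage returns one HTML fragment per section (the `Section` shape is mine), and it builds a request for Brevo’s transactional email endpoint (`POST https://api.brevo.com/v3/smtp/email`) that you would hand to `fetch`. The sender address is a placeholder.

```typescript
// Hypothetical section shape: one HTML fragment per report section from the Compiler stage.
interface Section {
  id: string;
  title: string;
  html: string; // trusted HTML generated upstream
}

// Escape plain text before interpolating it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Stitches the sections into one standalone HTML page with a linked table of contents.
function assembleReport(address: string, sections: Section[]): string {
  const toc = sections
    .map((s) => `<li><a href="#${escapeHtml(s.id)}">${escapeHtml(s.title)}</a></li>`)
    .join("");
  const body = sections
    .map((s) => `<section id="${escapeHtml(s.id)}"><h2>${escapeHtml(s.title)}</h2>${s.html}</section>`)
    .join("\n");
  return (
    `<!doctype html><html><head><meta charset="utf-8"><title>${escapeHtml(address)}</title></head>` +
    `<body><h1>${escapeHtml(address)}</h1><nav><ol>${toc}</ol></nav>${body}</body></html>`
  );
}

// Request for Brevo's transactional email API; pass to fetch(req.url, req.init).
function brevoEmailRequest(apiKey: string, to: string, reportUrl: string) {
  return {
    url: "https://api.brevo.com/v3/smtp/email",
    init: {
      method: "POST",
      headers: { "api-key": apiKey, "content-type": "application/json", accept: "application/json" },
      body: JSON.stringify({
        sender: { name: "Uppa Hand", email: "reports@example.com" }, // placeholder sender
        to: [{ email: to }],
        subject: "Your property report is ready",
        htmlContent: `<p>Your report is ready: <a href="${escapeHtml(reportUrl)}">view it here</a>.</p>`,
      }),
    },
  };
}
```

Emailing a link rather than attaching the report keeps the message small and lets the report be updated or re-rendered without resending anything.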

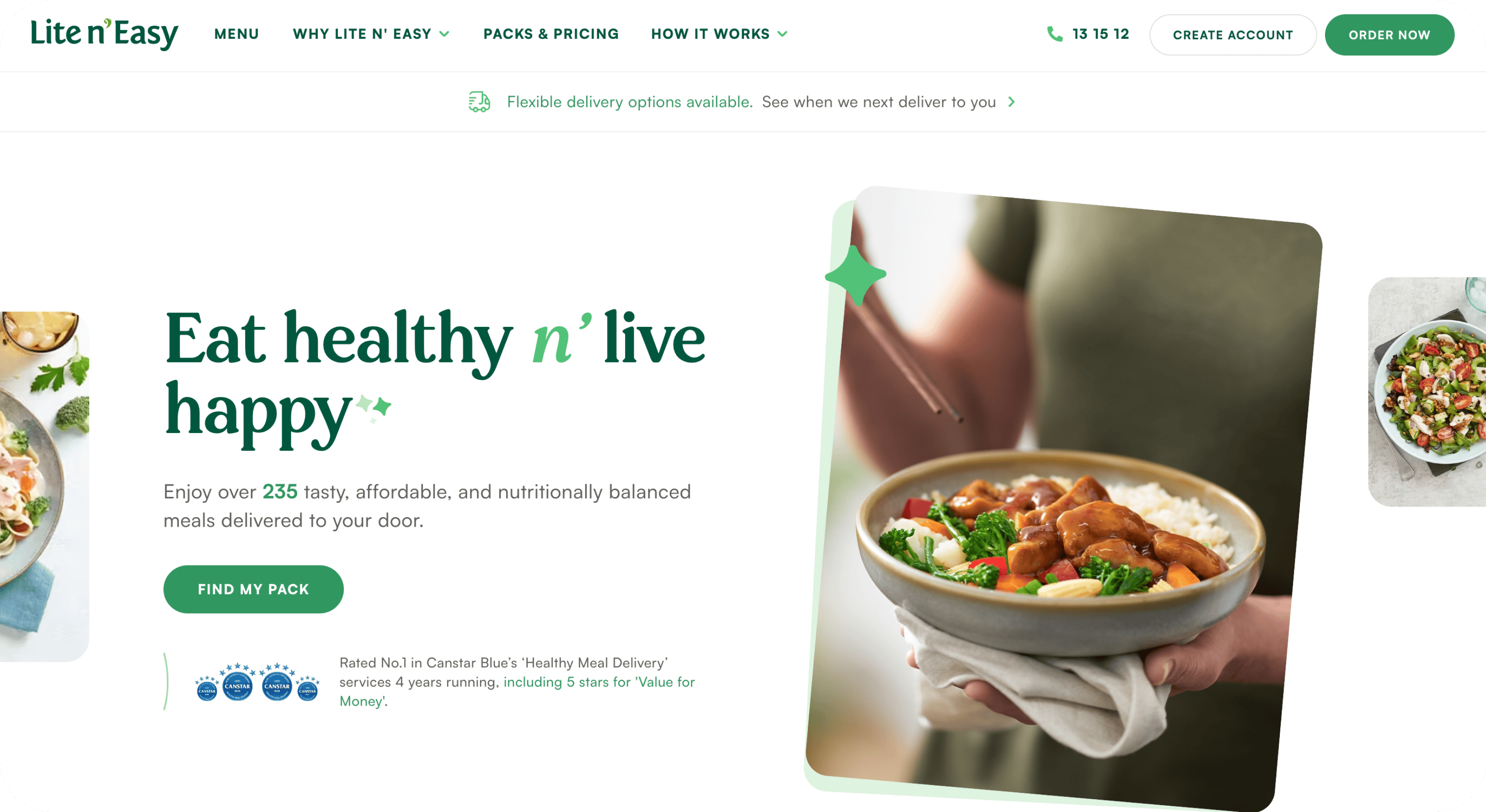

5. Frontend Build

I wanted an experience as frictionless as humanly possible: three clicks and you should be looking at a report. To rapidly test the core user journey, I used v0 to generate a functional React/Next.js application. This served as a high-quality MVP that allowed extensive iteration (30+ deployments) to refine the UI/UX. When I wanted changes, I could mock them up in Figma and feed the mockups into v0 to replicate, trusting it to use shadcn/ui and Tailwind CSS to keep the app stable and snappy.

6. Scalable Delivery

The obvious MVP shortcut would be to wait for someone to buy a report and then run the workflow manually. However, that doesn’t scale, nor does it deepen my understanding of these technologies (a goal of the project). For report delivery, I integrated Brevo so transactional emails would reliably land in users' inboxes, avoiding the spam filters associated with default cloud domains. Then I integrated Stripe to handle payments, connecting its webhook to the n8n workflow to create a fully automated, end-to-end "purchase-to-delivery" pipeline.
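If you receive Stripe’s webhook directly (say, in a Next.js route) rather than through n8n’s Stripe trigger, the event should be authenticated before it kicks off a paid pipeline. This sketch shows the mechanics of Stripe’s `Stripe-Signature` check: an HMAC-SHA256 of `timestamp.rawBody` keyed with the endpoint secret, compared in constant time, plus a five-minute replay tolerance. The function name is hypothetical; in practice the official `stripe` library’s `stripe.webhooks.constructEvent` does this for you and is the safer choice.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";
import { Buffer } from "node:buffer";

// Verifies a Stripe webhook signature header of the form "t=<unix>,v1=<hex>[,v1=...]".
function verifyStripeSignature(
  rawBody: string,
  header: string,
  secret: string,
  toleranceSec = 300,
  nowSec = Math.floor(Date.now() / 1000),
): boolean {
  // Split "k=v" pairs; Stripe may send several v1 signatures during secret rotation.
  const pairs = header.split(",").map((part) => part.trim().split("="));
  const t = pairs.find(([key]) => key === "t")?.[1];
  const signatures = pairs.filter(([key]) => key === "v1").map(([, value]) => value);
  const timestamp = Number(t);
  if (t === undefined || !Number.isFinite(timestamp) || signatures.length === 0) return false;

  // Reject stale events to limit replay attacks.
  if (Math.abs(nowSec - timestamp) > toleranceSec) return false;

  // Signed payload is "<timestamp>.<raw body>", HMAC-SHA256 with the endpoint secret.
  const expected = createHmac("sha256", secret).update(`${t}.${rawBody}`, "utf8").digest();
  return signatures.some((sig) => {
    const given = Buffer.from(sig, "hex");
    return given.length === expected.length && timingSafeEqual(given, expected);
  });
}
```

Use the raw, unparsed request body here: re-serialising parsed JSON can change whitespace and break the signature.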

Tech Stack

- Automation: n8n

- AI Models: OpenAI GPT-4o/o3, Perplexity Sonar, Anthropic Claude Sonnet (via OpenRouter)

- Front-End: React, Next.js, v0 by Vercel

- Deployment & Hosting: Vercel, Cloudflare

- Storage: Cloudflare R2 (S3-compatible)

- Payments: Stripe

- Email: Brevo

- IDE: Cursor

Learnings / Reflections

Prompting ain’t a product

Yeah, writing good, ambitious prompts is fun (prompt engineering is a million-dollar skill, remember?). But prompts don’t pay the rent. What really matters is how you wrap those prompts in an actual system with structure, flow, and fallback plans for when stuff goes sideways (because it does). Getting the system to reproduce the desired output reliably and consistently took 90% of the build time. Be prepared to sink the hours in.
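What a “fallback plan” can look like in code: a hypothetical `withFallback` wrapper that retries each model with exponential backoff, treats an empty response as a failure, and then falls through to the next model in the list. The retry counts and delays are illustrative defaults, not tuned values, and this is not necessarily how the n8n workflow handles errors.

```typescript
// One attempt at producing output with a given model.
type Attempt = (model: string) => Promise<string>;

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Tries each model in order; retries transient failures with exponential backoff
// before moving on. Throws with a log of every failure if all models fail.
async function withFallback(
  models: string[],
  attempt: Attempt,
  retriesPerModel = 2,
  baseDelayMs = 500,
): Promise<{ model: string; output: string }> {
  const errors: string[] = [];
  for (const model of models) {
    for (let i = 0; i <= retriesPerModel; i++) {
      try {
        const output = await attempt(model);
        // A successful HTTP response isn't always a usable answer.
        if (output.trim().length === 0) throw new Error("empty output");
        return { model, output };
      } catch (err) {
        const message = err instanceof Error ? err.message : String(err);
        errors.push(`${model} (try ${i + 1}): ${message}`);
        if (i < retriesPerModel) await sleep(baseDelayMs * 2 ** i);
      }
    }
  }
  throw new Error(`All models failed:\n${errors.join("\n")}`);
}
```

Returning which model actually answered makes it easy to log how often the fallback path is used.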

APIs are product people’s new ABCs

Everyone's excited about chat interfaces and what they can generate in the browser. That’s where the project inspiration came from. But the product itself came together by quickly stitching models and services together via APIs. It’s like assembling digital IKEA furniture: sometimes the instructions were badly lacking and figuring it out sucked (sorry for the mean words, ChatGPT). Getting comfortable with that process is the new skill.

Two little hacks that were helpful:

1. By the end of the project I had a bunch of custom GPTs that helped me write correct API requests for the payloads I envisioned. Massive time saved.

2. Using a model aggregator (i.e. OpenRouter) saved me from learning the intricacies of each lab's API format and standardised my inputs and outputs. It also let me swap models super quickly.
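A sketch of why the aggregator helps: OpenRouter exposes an OpenAI-compatible chat completions endpoint, so every model is called with the same request shape and swapping labs is a one-string change. The helper names are hypothetical, and the model slugs in the comments are assumptions; check OpenRouter’s model list for current IDs.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// The slice of an OpenAI-style chat completion response we read.
interface ChatCompletion {
  choices?: { message?: { content?: string | null } }[];
}

// Same request shape for every model; only `model` changes.
function openRouterRequest(apiKey: string, model: string, messages: ChatMessage[]) {
  return {
    url: "https://openrouter.ai/api/v1/chat/completions",
    init: {
      method: "POST",
      headers: { Authorization: `Bearer ${apiKey}`, "Content-Type": "application/json" },
      body: JSON.stringify({ model, messages }),
    },
  };
}

// Pulls the text out of the first choice, failing loudly on an unexpected shape.
function extractText(response: ChatCompletion): string {
  const text = response.choices?.[0]?.message?.content;
  if (typeof text !== "string") throw new Error("Unexpected response shape");
  return text;
}

// e.g. ask(key, "openai/gpt-4o", prompt) or ask(key, "anthropic/claude-3.5-sonnet", prompt)
async function ask(apiKey: string, model: string, prompt: string): Promise<string> {
  const { url, init } = openRouterRequest(apiKey, model, [{ role: "user", content: prompt }]);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`OpenRouter request failed: HTTP ${res.status}`);
  return extractText((await res.json()) as ChatCompletion);
}
```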

Fully alive spec

My static PRD couldn’t keep up with how fast the tech and tasks changed day to day. Even with AI assistance, the doc was a pain to update in the chat UI. So I treated my spec like code. Using Cursor, I voice-dictated updates and let an AI assistant version my context docs inline. This freed up a lot of headspace, cut down my busywork, and reduced the friction of switching mental models between dev, design, prompting, and strategy. If I needed to brief a new model on progress, all it took was that one document. I can see this approach extending well to progress reports, team stand-ups, human-to-human briefings, etc. Honestly, I think this way of working is the future of docs.

DevOps is not vibe-able

I honestly thought modern tools would handle the grunt work between a working prototype and a live website. The hard bit was building the thing, right? Nuh-uh. Even with great tools like v0, there’s still a ton of behind-the-scenes setup just to get things running. Even once you’ve climbed that mountain, it’s hard to know how safe and reliable things are. I learned I’m never going to be fluent in this world, and it’s very much not my skillset. There’s a huge opportunity for someone to smooth out these workflows for the next generation of non-engineer builders.